Blog's Page

Cache Or Cache Memory Refers To A Type Of High-speed Memory Used By Computers And Other Electronic Devices To Temporarily Store Frequently Accessed Data Or Instructions.

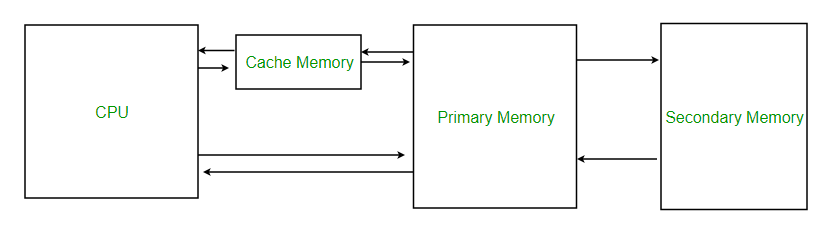

When A Computer Performs A Task, The Processor Reads Data And Instructions From Main Memory (RAM). Main Memory Is Slow Relative To The Processor, So The Processor Can Stall While It Waits For Each Request To Be Served. Cache Memory Addresses This Issue By Keeping Frequently Accessed Data And Instructions In A Small, High-speed Memory That Can Be Accessed More Quickly Than Main Memory.

Cache Memory Is Typically Located Closer To The Processor Than Main Memory, Allowing It To Be Accessed More Quickly. There Are Different Levels Of Cache Memory, With Each Level Being Larger And Slower Than The Previous Level But Still Faster Than Main Memory. The Most Commonly Used Types Of Cache Memory In Modern Computers Are L1, L2, And L3 Caches.

By Using Cache Memory, Computers Can Improve Their Performance By Reducing The Time It Takes To Access Frequently Used Data And Instructions. However, Cache Memory Is Limited In Size, And It Can Only Store A Portion Of The Data And Instructions That The Computer May Need To Access, So It Must Be Managed Carefully To Ensure That The Most Important Data Is Stored In Cache Memory.

There Are Typically Three Types Or Levels Of Cache Memory Used In Modern Computer Systems: L1, L2, And L3 Cache. Each Level Of Cache Memory Has Its Own Characteristics And Serves A Different Purpose In The Computer's Memory Hierarchy.

L1 Cache: L1 Cache, Also Known As Primary Cache, Is The Smallest And Fastest Cache Memory In The Computer's Memory Hierarchy. It Is Located Within Each Processor Core And Provides The Fastest Access To Frequently Used Data And Instructions. L1 Cache Is Usually Divided Into Separate Instruction And Data Caches.

L2 Cache: L2 Cache, Also Known As Secondary Cache, Is Larger But Slower Than L1 Cache. In Modern Processors It Sits On The Processor Die, Although Older Systems Placed It On A Separate Chip. Depending On The Design, L2 Cache Is Either Private To Each Core Or Shared By A Group Of Cores.

L3 Cache: L3 Cache, Also Known As Tertiary Cache, Is Usually The Largest And Slowest Cache In The Computer's Memory Hierarchy. In Modern Processors It Is Part Of The Processor Package Rather Than A Separate Chip On The Motherboard, And It Is Typically Shared Among All Cores In A Multi-core Processor. It Holds More Frequently Used Data And Instructions Than L2 Cache Can.

In Addition To These Three Types Of Cache Memory, Some Computer Systems May Also Have Other Types Of Cache Memory, Such As Level 4 (L4) Cache Or Non-volatile Cache, Which Is A Type Of Cache That Retains Data Even When The Power Is Turned Off. However, These Types Of Cache Memory Are Less Common And Are Typically Used Only In Specialized Computing Environments.

An Example Of Cache Memory Is The Cache Found On A Modern Processor, Such As An Intel Core i7.

Many Intel Core i7 Processors Have Three Levels Of Cache Memory: L1, L2, And L3. In Several Generations, The L1 Cache Is Divided Into Separate Instruction And Data Caches Of 32 KB Each Per Core, The L2 Cache Has A Capacity Of 256 KB Per Core, And The L3 Cache Has A Capacity Of Up To 16 MB Shared Among All Cores. Exact Sizes Vary By Model And Generation.

When The Processor Needs To Access Data Or Instructions From Memory, It First Checks The L1 Cache For The Requested Data. If The Data Is Not Found In The L1 Cache, The Processor Checks The L2 Cache, And So On Until The Data Is Found Or Until It Reaches Main Memory.
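The Lookup Order Described Above Can Be Sketched In A Few Lines Of Python. This Is A Toy Model, Not How Hardware Is Built: The Names `CacheLevel` And `lookup` Are Hypothetical, The Cycle Counts Are Assumed Round Numbers, And The Levels Have No Capacity Limit.

```python
from dataclasses import dataclass, field


@dataclass
class CacheLevel:
    name: str
    latency_cycles: int  # assumed, illustrative cost of probing this level
    contents: set = field(default_factory=set)


def lookup(address, levels, memory_latency_cycles=200):
    """Probe each level in order and return (where_found, total_cycles)."""
    cycles = 0
    for level in levels:
        cycles += level.latency_cycles
        if address in level.contents:
            return level.name, cycles
    # Missed in every level: pay the main-memory cost and keep a copy
    # in each level so the next access to this address is a hit.
    cycles += memory_latency_cycles
    for level in levels:
        level.contents.add(address)
    return "RAM", cycles


levels = [CacheLevel("L1", 4), CacheLevel("L2", 12), CacheLevel("L3", 40)]
first = lookup(0x1000, levels)   # cold access falls through to RAM
second = lookup(0x1000, levels)  # repeat access is served by L1
print(first)   # ('RAM', 256)
print(second)  # ('L1', 4)
```

The First Access Pays For Every Level Plus Main Memory, While The Repeat Access Stops At L1.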

By Storing Frequently Accessed Data And Instructions In Cache Memory, The Processor Can Access The Data More Quickly, Improving Overall System Performance.

Cache Is Faster Than RAM Because It Is Located Closer To The Processor And Has Faster Access Times.

Cache Memory Is Typically Located Within Or Very Close To The Processor, Which Means That It Can Be Accessed Much More Quickly Than RAM, Which Sits Farther Away On The Motherboard. In Addition, Cache Memory Is Designed For Very Fast Access, With Typical Access Times Ranging From About One Nanosecond (ns) To A Few Tens Of Nanoseconds Depending On The Level, Compared To RAM Access Times, Which Are Typically Measured In Tens Or Hundreds Of Nanoseconds.
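The Gap Between Cache And RAM Access Times Can Be Combined Into A Single Figure, The Average Memory Access Time. The Sketch Below Is Illustrative Only: The Function Name `average_access_time` Is Hypothetical, And The Latencies And Hit Rates Are Assumed Values, Not Measurements Of Any Real Processor.

```python
def average_access_time(levels, memory_ns):
    """Average memory access time for a cache hierarchy.

    levels: list of (hit_time_ns, hit_rate) pairs, ordered from L1 outward.
            Each hit_rate is the fraction of requests reaching that level
            that it can serve.
    memory_ns: time to fetch from main memory.
    """
    # Work from the outermost level inward: the miss penalty of a level
    # is the average access time of everything behind it.
    amat = memory_ns
    for hit_time_ns, hit_rate in reversed(levels):
        amat = hit_time_ns + (1.0 - hit_rate) * amat
    return amat


# Assumed example values: (hit time in ns, hit rate) for L1, L2, L3.
levels = [(1.0, 0.95), (4.0, 0.80), (15.0, 0.50)]
with_cache = average_access_time(levels, memory_ns=80.0)
without_cache = average_access_time([], memory_ns=80.0)

print(f"{with_cache:.2f} ns")     # 1.75 ns
print(f"{without_cache:.2f} ns")  # 80.00 ns
```

Under These Assumed Numbers, Most Requests Are Served By L1, So The Average Stays Close To The L1 Hit Time Even Though Main Memory Is Far Slower.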

Another Factor That Makes Cache Memory Faster Than RAM Is Its Technology And Size. Cache Memory Is Built From SRAM, Which Is Faster But Takes More Chip Area And Costs More Per Unit Of Storage Than The DRAM Used For RAM. A Smaller Memory Is Also Quicker To Search And Read. The Trade-off Is That Cache Memory Can Only Hold A Small Amount Of Data Compared To RAM.

Overall, Cache Memory Is Faster Than RAM Because Of Its Close Proximity To The Processor And Its Smaller Size, Which Allows For Faster Access Times. However, Cache Memory Is More Expensive Than RAM And Has Limited Capacity, So It Is Used Primarily To Store Frequently Accessed Data And Instructions, While RAM Is Used For Storing Larger Amounts Of Data And Running Applications.

Cache Memory Works By Storing Frequently Accessed Data And Instructions In A Small, High-speed Memory That Can Be Accessed More Quickly Than Main Memory. When The Processor Needs To Access Data Or Instructions, It First Checks The Cache Memory To See If The Requested Data Is Already Stored There. If The Data Is Found In The Cache Memory, It Is Quickly Accessed And Used By The Processor. If The Data Is Not Found In The Cache Memory, The Processor Fetches It From The Main Memory And Stores A Copy Of It In The Cache Memory For Future Use.

Cache Memory Works On The Principle Of Locality, Which States That Programs Tend To Access A Relatively Small Portion Of Their Address Space At Any Given Time, And That Nearby Memory Locations Are More Likely To Be Accessed Than Faraway Memory Locations. Cache Memory Takes Advantage Of This Principle By Storing Recently Accessed Data And Instructions, As Well As Nearby Data And Instructions That Are Likely To Be Accessed In The Near Future.
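The Effect Of Locality Can Be Seen By Counting Misses In A Toy Cache For Two Ways Of Walking The Same Matrix. Everything Here Is An Assumption Made For Illustration: The Function `count_misses` Is Hypothetical, The Cache Is A Simple Direct-mapped Model With 64-byte Lines And 32 KB Of Capacity, And The Matrix Is 256 By 256 Elements Of 8 Bytes Stored Row By Row.

```python
def count_misses(addresses, line_size=64, num_lines=512):
    """Count misses in a toy direct-mapped cache for a list of byte addresses."""
    lines = [None] * num_lines  # each slot remembers which memory block it holds
    misses = 0
    for addr in addresses:
        block = addr // line_size   # which line-sized block of memory
        slot = block % num_lines    # the single slot that block may occupy
        if lines[slot] != block:
            misses += 1
            lines[slot] = block     # load the block, replacing the old one
    return misses


N = 256    # matrix is N x N
ELEM = 8   # bytes per element

# Row-by-row traversal touches neighbouring addresses one after another.
row_order = [(r * N + c) * ELEM for r in range(N) for c in range(N)]
# Column-by-column traversal jumps N * ELEM bytes between accesses.
col_order = [(r * N + c) * ELEM for c in range(N) for r in range(N)]

row_misses = count_misses(row_order)
col_misses = count_misses(col_order)
print(row_misses)  # 8192
print(col_misses)  # 65536
```

Both Traversals Read The Same 65,536 Elements, But The Row-by-row Walk Misses Only Once Per 64-byte Line, While In This Model The Column-by-column Walk Misses On Every Access.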

Because Cache Memory Is Organized Into Multiple Levels, A Request That Misses In The L1 Cache Falls Through To The L2 Cache, Then The L3 Cache, And Finally Main Memory, With Each Step Taking Longer Than The One Before.

Cache Memory Is Implemented As A Small, High-speed Memory That Is Integrated With The Processor Or, In Older Systems, Located Close To It On The Motherboard. The Exact Implementation Depends On The Level Of Cache And On The Design Of The Computer System.

The Most Commonly Used Types Of Cache Memory In Modern Computers Are L1, L2, And L3 Caches. All Three Are Typically Built From Fast SRAM (Static Random Access Memory) Cells. L1 Cache Is Integrated Into Each Processor Core. L2 Cache Was Often A Separate SRAM Chip On The Motherboard In Older Systems, But In Modern Processors It Is Part Of The Processor Die. L3 Cache Is Also Part Of The Processor In Modern Designs, But It Sits Farther From The Individual Cores And Is Usually Shared Among Them.

Cache Memory Is Organized Into Blocks Or Lines Of Data, With Each Block Typically Containing Multiple Words Or Bytes Of Data. When Data Is Read From Main Memory, It Is Typically Loaded Into A Cache Block Or Line, Along With Additional Data That Is Nearby In Memory. This Is Known As Cache Line Filling Or Cache Line Loading.
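To Make The Idea Of Cache Lines Concrete, The Sketch Below Shows One Common Way A Memory Address Can Be Split Into A Tag, A Set Index, And A Byte Offset Within The Line. The Function Names `split_address` And `line_bounds` Are Hypothetical, And The 64-byte Line Size And 64 Sets Are Assumed Values That Must Be Powers Of Two For This Bit Arithmetic To Work.

```python
def split_address(addr, line_size=64, num_sets=64):
    """Split a byte address into (tag, set_index, offset) for a toy cache."""
    offset_bits = line_size.bit_length() - 1  # log2 of a power of two
    index_bits = num_sets.bit_length() - 1
    offset = addr & (line_size - 1)                 # byte within the line
    index = (addr >> offset_bits) & (num_sets - 1)  # which set the line maps to
    tag = addr >> (offset_bits + index_bits)        # identifies the line within its set
    return tag, index, offset


def line_bounds(addr, line_size=64):
    """Return the first and last byte address of the line containing addr."""
    start = addr & ~(line_size - 1)
    return start, start + line_size - 1


parts = split_address(0x12345)
bounds = line_bounds(0x12345)
print(parts)                     # (18, 13, 5)
print([hex(b) for b in bounds])  # ['0x12340', '0x1237f']
```

Reading The Single Byte At 0x12345 Loads The Whole Range 0x12340 To 0x1237F In This Model, Which Is Why Nearby Data Arrives In The Cache Along With The Requested Byte.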

Cache Memory Is Managed By Dedicated Hardware In The Processor, Often Called The Cache Controller, Which Decides Which Data To Keep In Cache Memory And Which Data To Evict To Make Room For New Data. It Relies On Replacement Policies, Such As Approximations Of Least Recently Used (LRU), And On Prefetchers That Try To Predict Which Data Is Likely To Be Accessed Next.
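Eviction Can Be Illustrated With Least Recently Used (LRU) Replacement, One Well-known Policy That Real Hardware Usually Only Approximates. The Class `LRUCache` Below Is A Hypothetical Software Sketch With An Assumed Capacity Of Two Lines, Not A Description Of Any Particular Processor.

```python
from collections import OrderedDict


class LRUCache:
    """Toy fully associative cache that evicts the least recently used block."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()  # oldest entry first, newest last
        self.hits = 0
        self.misses = 0

    def access(self, block):
        """Return True on a hit, False on a miss (loading the block)."""
        if block in self.lines:
            self.lines.move_to_end(block)  # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used block
        self.lines[block] = True
        return False


cache = LRUCache(capacity=2)
trace = ["A", "B", "A", "C", "B"]
results = [cache.access(block) for block in trace]
print(results)            # [False, False, True, False, False]
print(list(cache.lines))  # ['C', 'B']
```

In This Trace, Loading C Evicts B Because A Was Used More Recently, So The Final Access To B Misses Even Though B Had Been Loaded Earlier.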

Overall, Cache Memory Is Stored In A Small, High-speed Memory That Is Integrated With Or Located Close To The Processor. The Exact Implementation Of Cache Memory Depends On The Specific Computer System And The Type Of Cache Memory Being Used.

Cache Memory Is Volatile Memory, Meaning That It Requires Power To Maintain Its Contents. When Power Is Removed, The Contents Of Cache Memory Are Lost. This Is In Contrast To Non-volatile Memory, Such As Hard Disk Drives Or Solid-state Drives, Which Retain Their Contents Even When Power Is Removed.

Cache Memory Is Volatile Because It Is Built From SRAM, Which Needs Power To Hold Its State, And Because Its Purpose Is To Provide A Fast, Temporary Storage Area For Frequently Accessed Data And Instructions Rather Than Long-term Storage. The Computer Can Access This Data Far More Quickly Than If It Had To Retrieve It From A Slower, Non-volatile Storage Device Like A Hard Drive.

However, It Is Important To Note That Modern Computer Systems Often Include Other Types Of Non-volatile Memory, Such As Flash Memory Or NVRAM (non-volatile Random Access Memory), That Can Be Used To Store Data That Needs To Be Retained Even When Power Is Removed. These Types Of Non-volatile Memory Are Typically Used For Storing Data Like The BIOS Settings, Firmware Updates, Or Other Configuration Information That Needs To Persist Even When The Computer Is Turned Off.
